I am a Senior Robotics/AI Engineer working at Figure AI. I work on the controls team developing control algorithms for the humanoid robot fleet, Figure 02. Specifically, I design and deploy controlled behaviors to enhance robot capabilities when interacting with the environment. I also work on making the control stack more robust and on designing machine learning algorithms to speed up various phases of the robot bring-up process.

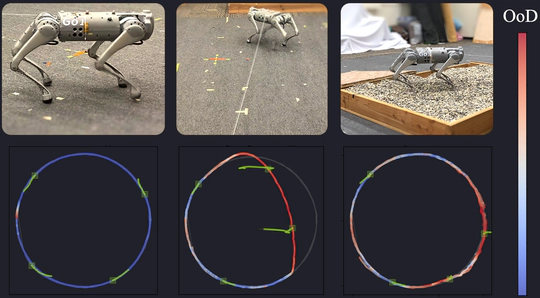

Before my current position, I was a postdoctoral research fellow at the Hybrid Systems Lab, University of California, Berkeley, working with Prof. Claire J. Tomlin. There, I conducted research on preventing unsafe behavior in autonomous systems that navigate through real-world unstructured environments, using out-of-distribution (OoD) run-time monitors.

I pursued my PhD in Computer Science at Prof. Stefan Schaal’s robot lab at the Max Planck Institute for Intelligent Systems, in Tübingen (Germany) and the University of Southern California. I was advised by Prof. Sebastian Trimpe and co-advised by Prof. Philipp Hennig. During my PhD, I collaborated with Prof. Jeannette Bohg, Prof. Angela P. Schoellig, and Prof. Andreas Krause.

In 2019, I was a visiting researcher at the Computational and Biological Learning Lab, at the University of Cambridge, UK, working with Prof. José Miguel Hernández-Lobato on Bayesian optimization. I was also a PhD intern at Meta AI (formerly FAIR) in California, working with Prof. Roberto Calandra on model-based reinforcement learning.


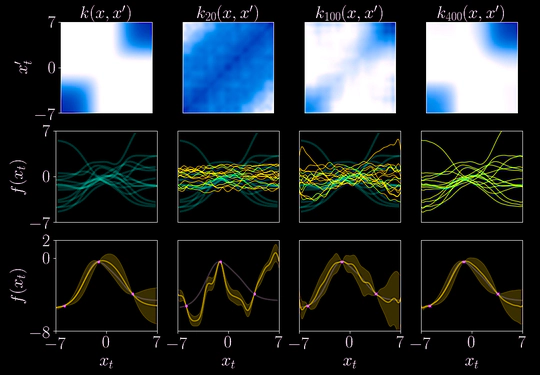

I also explore data efficiency in model-based reinforcement learning by informing the probabilistic dynamics model with existing expert knowledge (e.g., physics models, high-fidelity simulators). In particular, I study Gaussian process state-space models and Bayesian networks.

At a high level, I am highly passionate about scaling theoretically sound ideas to real systems. During my academic journey, I acquired hands-on expertise with quadrupeds, hexapods, bipeds and robot manipulators that navigate and interact in the real world.

Contact: alonso [dot] marcovalle [at] figure [dot] ai

- Humanoid Robot Locomotion

- Model-based predictive control

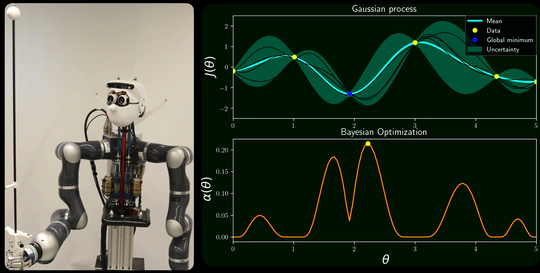

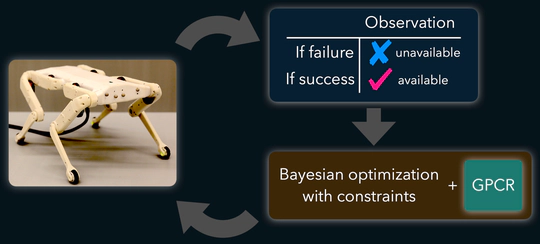

- Bayesian Optimization and Gaussian Processes

- Model-based Reinforcement Learning

Postdoctoral Research Fellow in Machine Learning and Robotics, 2023

University of California Berkeley

PhD in Robotics and Machine Learning, 2020

Max Planck Institute for Intelligent Systems and University of Tübingen, Germany

MSc in Artificial Intelligence, 2015

Polytechnical University of Catalonia, Spain

News

[Nov 2024]

I was in the news! Two renowned Spanish newspapers, Lanza and ABC, published articles about my academic and professional trajectory, focusing on my life and work on humanoid robots in Silicon Valley.

[Jan 2024]

Excited to share that I just joined Figure AI as a Senior Robotics/AI Engineer, working on the controls team!

[Dec 2023]

Our paper “Out of Distribution Detection via Domain-Informed Gaussian Process State Space Models” was presented at the 62nd IEEE Conference on Decision and Control (CDC), in Singapore.


[Jul 2023]

I presented our current progress on “Out of Distribution Detection via Simulation-Informed Gaussian Process State Space Models” at Prof. Jeannette Bohg’s group, the Interactive Perception and Robot Learning Lab at Stanford.

[Jul 2023]

Our paper “Out of Distribution Detection via Domain-Informed Gaussian Process State Space Models” has been accepted to CDC, held at Marina Bay Sands, in Singapore.

[Jun 2023]

I presented a poster at the Safe Aviation Autonomy Annual Meeting, under the NASA University Leadership Initiative (ULI), held at Stanford.

[Jun 2023]

I presented my work on “Online out-of-distribution detection via simulation-informed deep Gaussian process state-space models” at the DARPA Assured Neuro Symbolic Learning and Reasoning (ANSR) campus visit, held at UC Berkeley. Slides.

[May 2023]

I am part of the best paper award committee for the 5th Learning for Dynamics and Control Conference (L4DC), held at the University of Pennsylvania.

[Mar 2023]

We’ve submitted our paper “Out of Distribution Detection via Domain-Informed Gaussian Process State Space Models” to CDC, currently under review!

[Jul 2022]

Our paper on Koopman-based Lyapunov functions has been accepted at the 61st IEEE Conference on Decision and Control (Mexico)!

[Jun 2022]

I presented a poster at the Safe Aviation Autonomy NASA ULI annual meeting, held at Stanford.

[May 2022]

I am serving as part of the best paper award committee for the 4th Learning for Dynamics and Control Conference (L4DC), held at Stanford.

[Nov 2021]

I was invited as a guest lecturer at UC San Diego to talk about Bayesian optimization. This talk is part of a seminar series organized by Prof. Sylvia Herbert.

[Sep 2021]

I have moved to Berkeley! I’ve joined the Hybrid Systems Lab as a postdoc, working with Prof. Claire Tomlin on model-based RL and kernel methods.

[Apr 2021]

I have been awarded the Rafael del Pino Excellence Fellowship, which is granted to Spanish researchers with an outstanding academic path (1% acceptance rate).

[Feb 2021]

I have been invited to give a talk (remotely) at the Learning for Dynamics and Control seminar at UC Berkeley, jointly organized by the groups of Prof. Koushil Sreenath, Prof. Ben Recht and Prof. Francesco Borrelli.

[Jul 2020]

I have defended my PhD at the University of Tübingen, Germany! My thesis, entitled “Bayesian Optimization in Robot Learning: Automatic Controller Tuning and Sample-Efficient Methods”, can be found here.

[Jul 2020]

I have been invited to present my PhD thesis (remotely) at UC Berkeley, at Prof. Claire Tomlin’s group.

[Dec 2019]

I have presented the work I did during my internship at Facebook Artificial Intelligence Research (FAIR).

[Sep 2019]

I have moved to California for an internship at Facebook Artificial Intelligence Research (FAIR), working on model-based RL with Roberto Calandra.

[Apr 2019]

I have presented my ongoing work with Prof. José Miguel Hernández-Lobato at the Computational and Biological Learning Lab, University of Cambridge, UK.

[Mar 2019]

I have presented a poster at the Div-f Conference, at the University of Cambridge, UK.

[Mar 2019]

I have moved to Cambridge, UK for a research stay at the Computational and Biological Learning Lab, working with Prof. José Miguel Hernández-Lobato.

[Jan 2019]

Our journal paper “Data-efficient Auto-tuning with Bayesian Optimization: An Industrial Control Study” has been published!

Projects

Publications

Up to date with my Google Scholar profile